Motionoid II is an experimental project that combines computer-aided design, motion capture, and fabrication. “Motion” refers to the various movements triggered by the body, the environment, and other sources. The suffix “-oid” means “resembling,” and signals that the result is a translation of the combined outcome of these different actions.

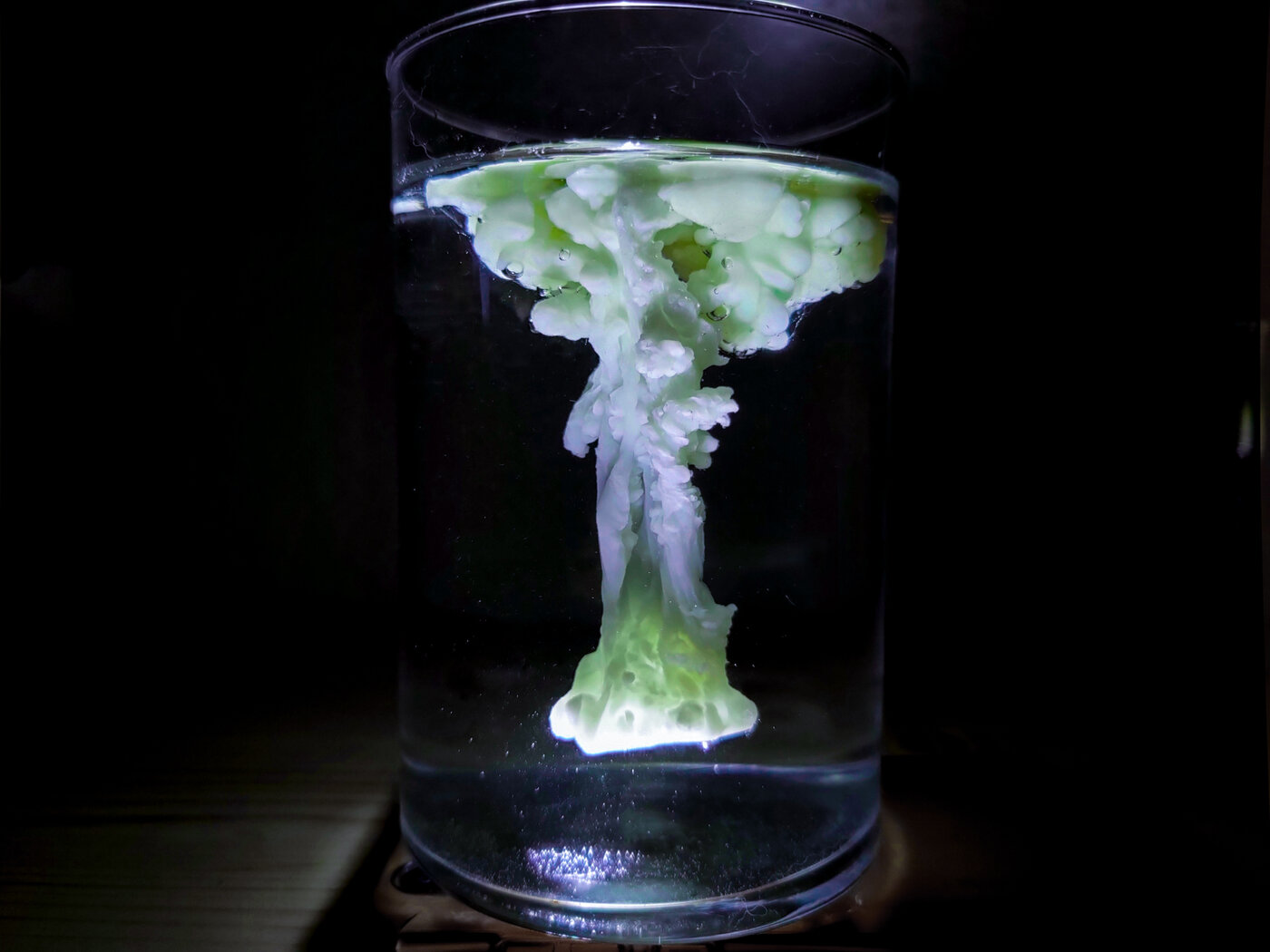

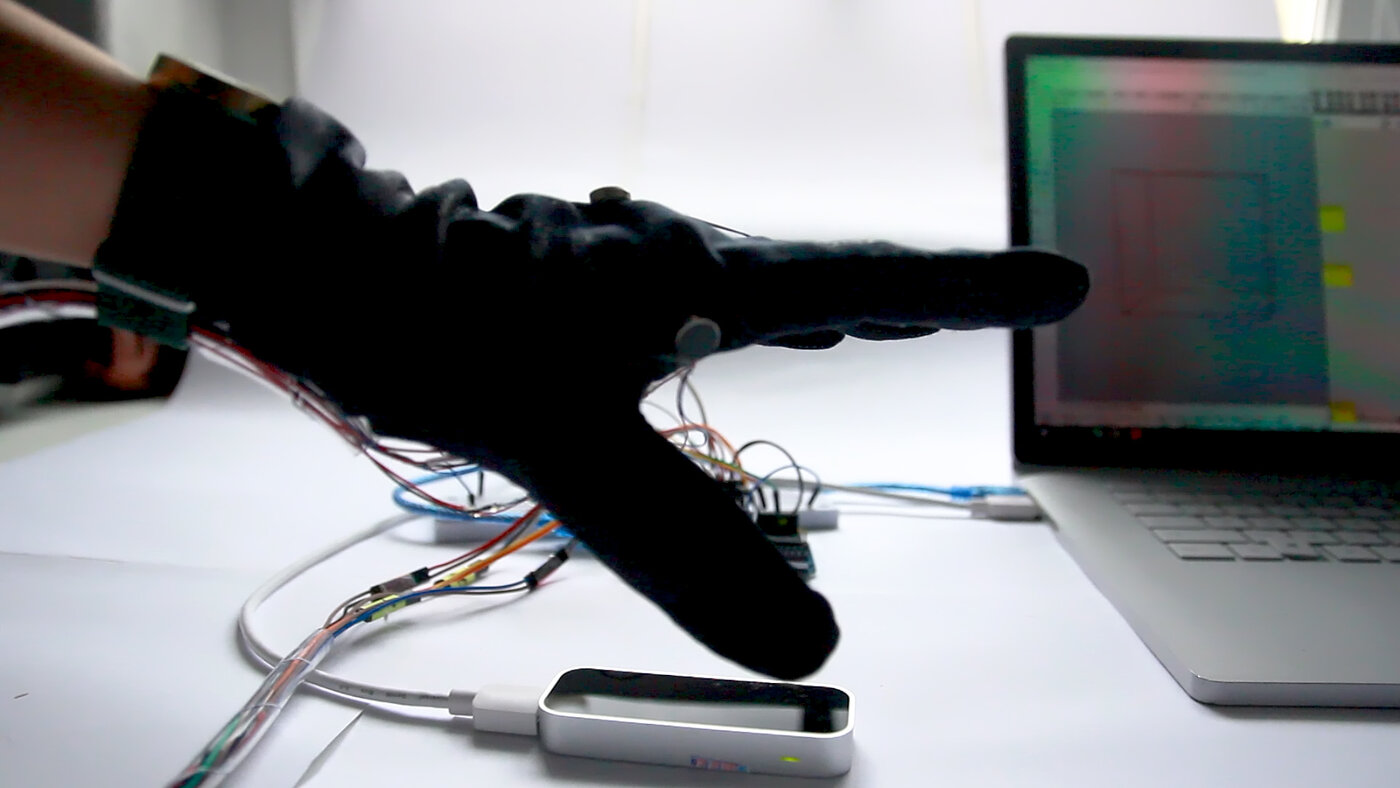

This project continues the previous semester’s project, which used Rhino and Grasshopper as a platform to connect and control a variety of hardware in order to explore a new way of operating fabrication that incorporates sensory experience. Gesture recognition was at the core of that practice, with Leap Motion serving as the input hardware. The final materiality exercise used water and melted paraffin, an approach that was quick and surprisingly effective.

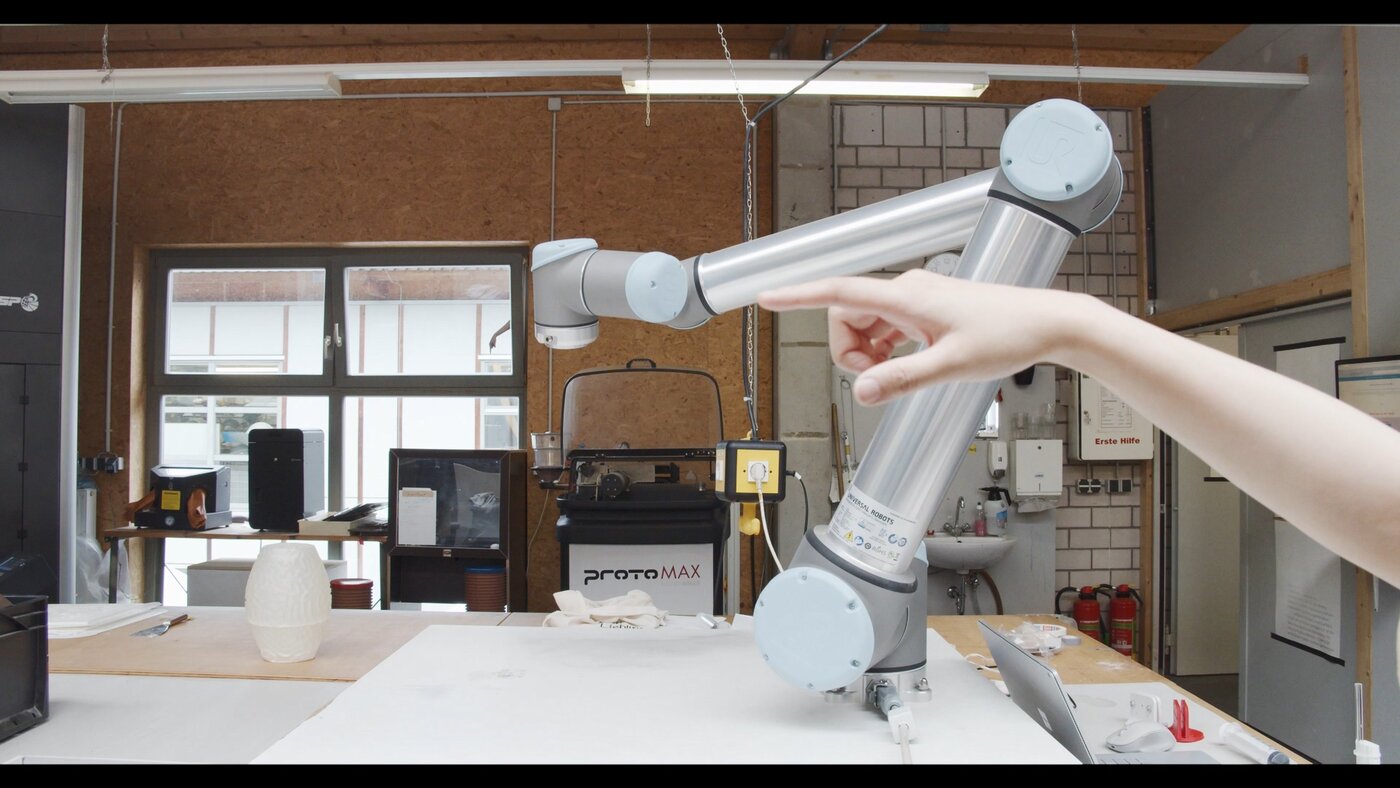

The practical question posed by this project is: “How does a human feel with a robotic arm?” The aim is to create an enhanced feedback system through which the user can control a robotic arm while also sensing the arm’s relationship to the space and environment in which it operates, and then use that sensation to guide the control. The project also addresses a design question: “How does design influence our behavior, and conversely, how does our behavior influence design?” This “human-arm-space” relationship can be spatial-geometric, simulating a physical environment, or artificially constructed. In either case, the feedback acts back on the operator’s behavior, and different feedback relationships produce different outcomes.

The end result of this material experiment is a paraffin mold. Forming paraffin is much faster than 3D printing because it can produce large volumes at once, and it allows more freedom of manipulation. Ideally, the designer can reshape and recast these paraffin models, with each piece representing the designer’s specific hand movements and understanding of space.

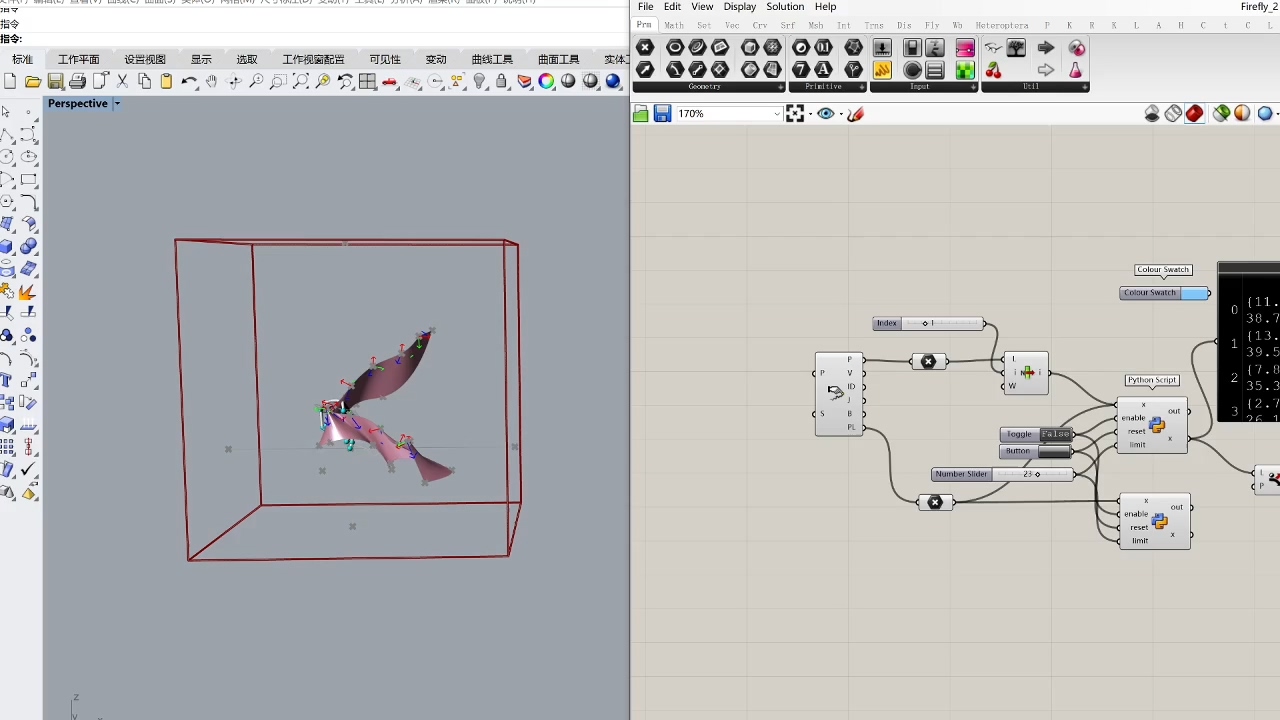

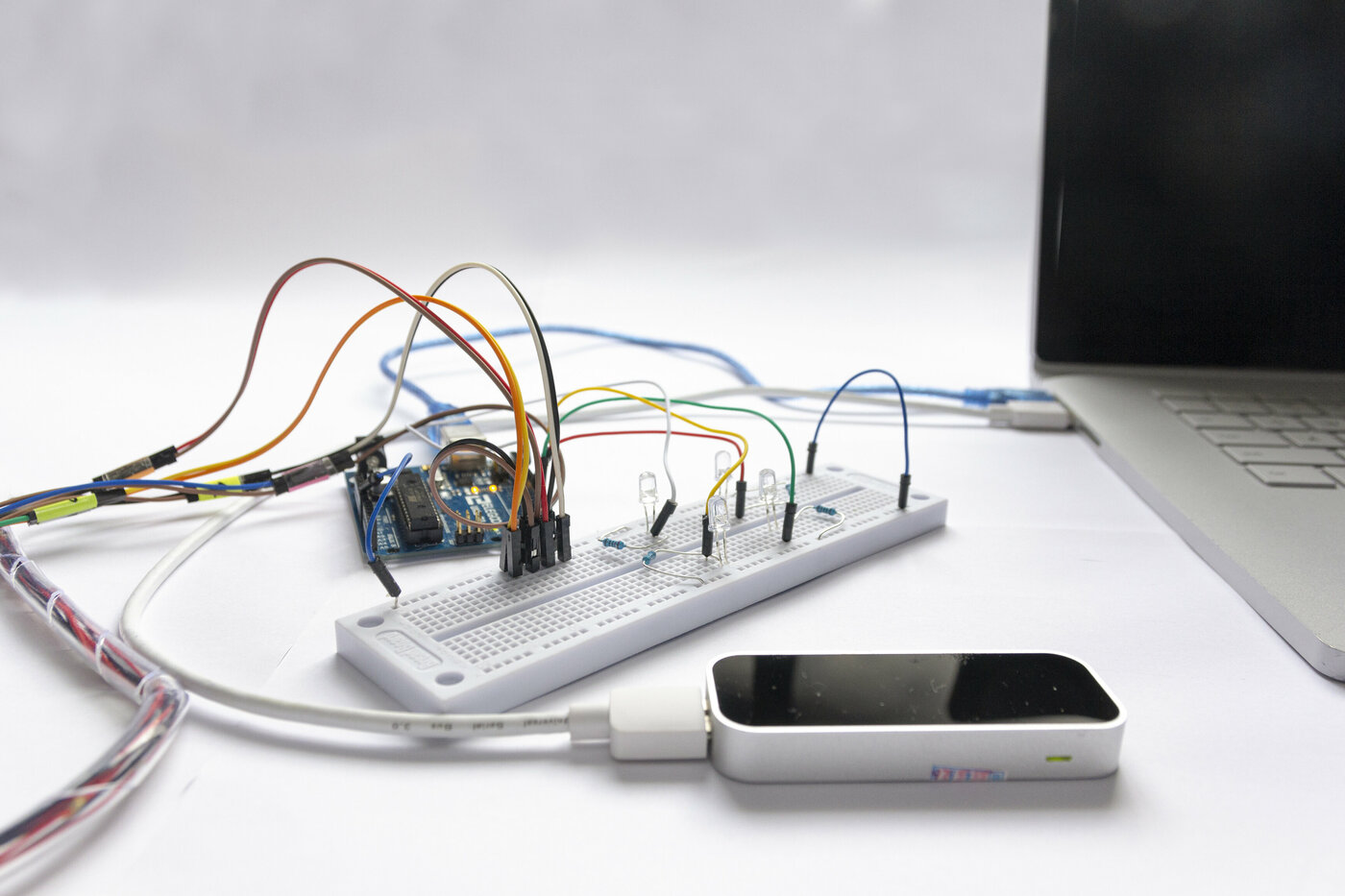

The entire feedback and control system runs in Rhino/Grasshopper. Leap Motion gesture data can be read and recorded through the Firefly plug-in, and Arduino reads and writes are also handled within this platform. The positions of the hand bones and relevant joints relative to the virtual space are translated into the PWM values the Arduino needs to drive light, vibration, and other feedback.
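The source does not say exactly how joint positions become PWM values. As a minimal sketch, assuming a simple linear falloff, the distance between a tracked joint and a target point in the virtual space can be mapped to the 0 to 255 range accepted by Arduino’s `analogWrite()`, so that feedback grows stronger as the hand approaches. The function name `proximity_to_pwm` and the `MAX_DISTANCE_MM` threshold are hypothetical, chosen only for illustration.

```python
import math

# Hypothetical threshold: beyond this distance (in mm), no feedback is produced.
MAX_DISTANCE_MM = 200.0
# Arduino's analogWrite() takes an 8-bit duty value from 0 to 255.
PWM_MAX = 255


def proximity_to_pwm(joint, target, max_distance=MAX_DISTANCE_MM):
    """Map the distance between a joint and a target point to a PWM duty value.

    Points are (x, y, z) tuples. A closer joint yields a higher value (stronger
    light or vibration); at or beyond max_distance the output is 0.
    """
    d = math.dist(joint, target)
    if d >= max_distance:
        return 0
    # Linear falloff: 1.0 at the target, 0.0 at max_distance.
    strength = 1.0 - d / max_distance
    return round(strength * PWM_MAX)


if __name__ == "__main__":
    fingertip = (0.0, 0.0, 0.0)
    for target in [(0.0, 0.0, 0.0), (0.0, 0.0, 50.0), (0.0, 0.0, 300.0)]:
        print(target, proximity_to_pwm(fingertip, target))
```

A linear curve is the simplest choice; an exponential or stepped curve could make the change in vibration easier to perceive, but the source does not specify one.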

The robotic arm is controlled via COMPAS_FAB, a framework that also provides Grasshopper components for robot control, which can be used once the robot connection and environment are set up. In the current demo, this process is simulated with the Data Recorder, which drives the vector direction of the robotic arm from the palm plane.
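To make “vector direction from the palm plane” concrete, here is a minimal sketch, assuming the palm is described by a position, a direction vector (toward the fingers), and a normal vector (out of the palm), which is the kind of data Leap Motion reports per tracked hand. It builds an orthonormal, right-handed frame whose z-axis follows the palm normal and could serve as the tool’s approach direction. The function `palm_to_frame` and the axis assignment are hypothetical illustrations, not the project’s actual Grasshopper definition.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(v: Vec3, s: float) -> Vec3:
    return (v[0] * s, v[1] * s, v[2] * s)


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(dot(v, v))
    if length == 0:
        raise ValueError("cannot normalize a zero-length vector")
    return scale(v, 1.0 / length)


def palm_to_frame(position: Vec3, direction: Vec3, normal: Vec3):
    """Return (origin, x, y, z) for a palm plane.

    z: unit palm normal (assumed tool approach direction).
    x: palm direction with its component along z removed (Gram-Schmidt).
    y: z cross x, so that x cross y == z (right-handed frame).
    """
    z = normalize(normal)
    x = normalize(sub(direction, scale(z, dot(direction, z))))
    y = cross(z, x)
    return position, x, y, z


if __name__ == "__main__":
    # Palm 100 mm above the sensor, fingers along +Y, palm facing down.
    origin, x, y, z = palm_to_frame((0.0, 0.0, 100.0), (0.0, 1.0, 0.0), (0.0, 0.0, -1.0))
    print(origin, x, y, z)
```

Orthogonalizing the direction against the normal keeps the frame valid even when the two tracked vectors are not exactly perpendicular. A point-plus-two-axes frame is also the form COMPAS uses for robot targets, but how the original definition passes the palm plane to the arm is not specified here.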